Since I debuted the stepwise correlation algorithm, I suppose the punchline that people want to see is: does it actually work?

The short answer? Yes, it does.

A slightly longer answer: it works, With the caveat that having a better correlation algorithm that makes up 25% of the total sum of weighted ranks only has a marginal (but nevertheless positive) effect on returns. Furthermore, when comparing a max decorrelation weighting using default single-pass correlation vs. stepwise, the stepwise gives a bumpier ride, but one with visibly larger returns. Furthermore, for this universe, the difference between starting at the security ranked highest by the momentum and volatility components, or with the security that has the smallest aggregate correlation to all securities, is very small. Essentially, from my inspection, the answer to using stepwise correlation is: “it’s a perfectly viable alternative, if not better.”

Here are the functions used in the script:

require(quantmod)

require(PerformanceAnalytics)

stepwiseCorRank <- function(corMatrix, startNames=NULL, stepSize=1, bestHighestRank=FALSE) {

#edge cases

if(dim(corMatrix)[1] == 1) {

return(corMatrix)

} else if (dim(corMatrix)[1] == 2) {

ranks <- c(1.5, 1.5)

names(ranks) <- colnames(corMatrix)

return(ranks)

}

if(is.null(startNames)) {

corSums <- rowSums(corMatrix)

corRanks <- rank(corSums)

startNames <- names(corRanks)[corRanks <= stepSize]

}

nameList <- list()

nameList[[1]] <- startNames

rankList <- list()

rankCount <- 1

rankList[[1]] <- rep(rankCount, length(startNames))

rankedNames <- do.call(c, nameList)

while(length(rankedNames) < nrow(corMatrix)) {

rankCount <- rankCount+1

subsetCor <- corMatrix[, rankedNames]

if(class(subsetCor) != "numeric") {

subsetCor <- subsetCor[!rownames(corMatrix) %in% rankedNames,]

if(class(subsetCor) != "numeric") {

corSums <- rowSums(subsetCor)

corSumRank <- rank(corSums)

lowestCorNames <- names(corSumRank)[corSumRank <= stepSize]

nameList[[rankCount]] <- lowestCorNames

rankList[[rankCount]] <- rep(rankCount, min(stepSize, length(lowestCorNames)))

} else { #1 name remaining

nameList[[rankCount]] <- rownames(corMatrix)[!rownames(corMatrix) %in% names(subsetCor)]

rankList[[rankCount]] <- rankCount

}

} else { #first iteration, subset on first name

subsetCorRank <- rank(subsetCor)

lowestCorNames <- names(subsetCorRank)[subsetCorRank <= stepSize]

nameList[[rankCount]] <- lowestCorNames

rankList[[rankCount]] <- rep(rankCount, min(stepSize, length(lowestCorNames)))

}

rankedNames <- do.call(c, nameList)

}

ranks <- do.call(c, rankList)

names(ranks) <- rankedNames

if(bestHighestRank) {

ranks <- 1+length(ranks)-ranks

}

ranks <- ranks[colnames(corMatrix)] #return to original order

return(ranks)

}

FAAreturns <- function(prices, monthLookback = 4,

weightMom=1, weightVol=.5, weightCor=.5,

riskFreeName="VFISX", bestN=3,

stepCorRank = FALSE, stepStartMethod=c("best", "default")) {

stepStartMethod <- stepStartMethod[1]

returns <- Return.calculate(prices)

monthlyEps <- endpoints(prices, on = "months")

riskFreeCol <- grep(riskFreeName, colnames(prices))

tmp <- list()

dates <- list()

for(i in 2:(length(monthlyEps) - monthLookback)) {

#subset data

priceData <- prices[monthlyEps[i]:monthlyEps[i+monthLookback],]

returnsData <- returns[monthlyEps[i]:monthlyEps[i+monthLookback],]

#perform computations

momentum <- data.frame(t(t(priceData[nrow(priceData),])/t(priceData[1,]) - 1))

priceData <- priceData[, momentum > 0] #remove securities with momentum < 0

returnsData <- returnsData[, momentum > 0]

momentum <- momentum[momentum > 0]

names(momentum) <- colnames(returnsData)

vol <- as.numeric(-sd.annualized(returnsData))

if(length(momentum) > 1) {

#perform ranking

if(!stepCorRank) {

sumCors <- -colSums(cor(returnsData, use="complete.obs"))

stats <- data.frame(cbind(momentum, vol, sumCors))

ranks <- data.frame(apply(stats, 2, rank))

weightRankSum <- weightMom*ranks$momentum + weightVol*ranks$vol + weightCor*ranks$sumCors

names(weightRankSum) <- rownames(ranks)

} else {

corMatrix <- cor(returnsData, use="complete.obs")

momRank <- rank(momentum)

volRank <- rank(vol)

compositeMomVolRanks <- weightMom*momRank + weightVol*volRank

maxRank <- compositeMomVolRanks[compositeMomVolRanks==max(compositeMomVolRanks)]

if(stepStartMethod=="default") {

stepCorRanks <- stepwiseCorRank(corMatrix=corMatrix, startNames = NULL,

stepSize = 1, bestHighestRank = TRUE)

} else {

stepCorRanks <- stepwiseCorRank(corMatrix=corMatrix, startNames = names(maxRank),

stepSize = 1, bestHighestRank = TRUE)

}

weightRankSum <- weightMom*momRank + weightVol*volRank + weightCor*stepCorRanks

}

totalRank <- rank(weightRankSum)

#find top N values, from http://stackoverflow.com/questions/2453326/fastest-way-to-find-second-third-highest-lowest-value-in-vector-or-column

#thanks to Dr. Rob J. Hyndman

upper <- length(names(returnsData))

lower <- max(upper-bestN+1, 1)

topNvals <- sort(totalRank, partial=seq(from=upper, to=lower))[c(upper:lower)]

#compute weights

longs <- totalRank %in% topNvals #invest in ranks length - bestN or higher (in R, rank 1 is lowest)

longs <- longs/sum(longs) #equal weight all candidates

longs[longs > 1/bestN] <- 1/bestN #in the event that we have fewer than top N invested into, lower weights to 1/top N

names(longs) <- names(totalRank)

} else if(length(momentum) == 1) { #only one security had positive momentum

longs <- 1/bestN

names(longs) <- names(momentum)

} else { #no securities had positive momentum

longs <- 1

names(longs) <- riskFreeName

}

#append removed names (those with momentum < 0)

removedZeroes <- rep(0, ncol(returns)-length(longs))

names(removedZeroes) <- names(returns)[!names(returns) %in% names(longs)]

longs <- c(longs, removedZeroes)

#reorder to be in the same column order as original returns/prices

longs <- data.frame(t(longs))

longs <- longs[, names(returns)]

#append lists

tmp[[i]] <- longs

dates[[i]] <- index(returnsData)[nrow(returnsData)]

}

weights <- do.call(rbind, tmp)

dates <- do.call(c, dates)

weights <- xts(weights, order.by=as.Date(dates))

weights[, riskFreeCol] <- weights[, riskFreeCol] + 1-rowSums(weights)

strategyReturns <- Return.rebalancing(R = returns, weights = weights, geometric = FALSE)

colnames(strategyReturns) <- paste(monthLookback, weightMom, weightVol, weightCor, sep="_")

return(strategyReturns)

}

The FAAreturns function has been modified to transplant the stepwise correlation algorithm I discussed earlier. Essentially, the chunk of code that performs the ranking inside the function got a little bit larger, and some new arguments to the function have been introduced.

First off, there’s the option to use the stepwise correlation algorithm in the first place–namely, the stepCorRank defaulting to FALSE (the default settings replicate the original FAA idea demonstrated in the first post on this idea). However, the argument that comes next, the stepStartMethod argument does the following:

Using the “default” setting, the algorithm will start off using the security that is simply least correlated among the securities (that is, the lowest sum of correlations among securities). However, the “best” setting instead will use the weighted sum of ranks using the prior two factors (momentum and volatility). This argument defaults to using the best security (aka the one best ranked prior by the previous two factors), as opposed to the default. At the end of the day, I suppose the best way of illustrating functionality is with some examples of taking this piece of engineering out for a spin. So here goes!

mutualFunds <- c("VTSMX", #Vanguard Total Stock Market Index

"FDIVX", #Fidelity Diversified International Fund

"VEIEX", #Vanguard Emerging Markets Stock Index Fund

"VFISX", #Vanguard Short-Term Treasury Fund

"VBMFX", #Vanguard Total Bond Market Index Fund

"QRAAX", #Oppenheimer Commodity Strategy Total Return

"VGSIX" #Vanguard REIT Index Fund

)

#mid 1997 to end of 2012

getSymbols(mutualFunds, from="1997-06-30", to="2012-12-31")

tmp <- list()

for(fund in mutualFunds) {

tmp[[fund]] <- Ad(get(fund))

}

#always use a list hwne intending to cbind/rbind large quantities of objects

adPrices <- do.call(cbind, args = tmp)

colnames(adPrices) <- gsub(".Adjusted", "", colnames(adPrices))

original <- FAAreturns(adPrices, stepCorRank=FALSE)

originalSWCbest <- FAAreturns(adPrices, stepCorRank=TRUE)

originalSWCdefault <- FAAreturns(adPrices, stepCorRank=TRUE, stepStartMethod="default")

stepMaxDecorBest <- FAAreturns(adPrices, weightMom=.05, weightVol=.025,

weightCor=1, riskFreeName="VFISX",

stepCorRank = TRUE, stepStartMethod="best")

stepMaxDecorDefault <- FAAreturns(adPrices, weightMom=.05, weightVol=.025,

weightCor=1, riskFreeName="VFISX",

stepCorRank = TRUE, stepStartMethod="default")

w311 <- FAAreturns(adPrices, weightMom=3, weightVol=1, weightCor=1, stepCorRank=TRUE)

originalMaxDecor <- FAAreturns(adPrices, weightMom=0, weightVol=1, stepCorRank=FALSE)

tmp <- cbind(original, originalSWCbest, originalSWCdefault,

stepMaxDecorBest, stepMaxDecorDefault, w311, originalMaxDecor)

names(tmp) <- c("original", "originalSWCbest", "originalSWCdefault", "SMDB",

"SMDD", "w311", "originalMaxDecor")

charts.PerformanceSummary(tmp, colorset=c("black", "orange", "blue", "purple", "green", "red", "darkred"))

statsTable <- data.frame(t(rbind(Return.annualized(tmp)*100, maxDrawdown(tmp)*100, SharpeRatio.annualized(tmp))))

statsTable$ReturnDrawdownRatio <- statsTable[,1]/statsTable[,2]

Same seven securities as the original paper, with the following return streams:

Original: the FAA original replication

originalSWCbest: original weights, stepwise correlation algorithm, using the best security as ranked by momentum and volatility as a starting point.

originalSWCdefault: original weights, stepwise correlation algorithm, using the default (minimum sum of correlations) security as a starting point.

stepMaxDecorBest: a max decorrelation algorithm that sets the momentum and volatility weights at .05 and .025 respectively, compared to 1 for correlation, simply to get the best starting security through the first two factors.

stepMaxDecorDefault: analogous to originalSWCdefault, except with the starting security being defined as the one with minimum sum of correlations.

w311: using a weighting of 3, 1, and 1 on momentum, vol, and correlation, respectively, while using the stepwise correlation rank algorithm, starting with the best security (the default for the function), since I suspected that not weighing momentum at 1 or higher was the reason any other equity curves couldn’t top out above the paper’s original.

originalMaxDecor: max decorrelation using the original 1-pass correlation matrix

Here is the performance chart:

Here’s the way I interpret it:

Does David Varadi’s stepwise correlation ranking algorithm help performance? From this standpoint, the answers lead to yes. Using the original paper’s parameters, the performance over the paper’s backtest period is marginally better in terms of the equity curves. Comparing max decorrelation algorithms (SMDB and SMDD stand for stepwise max decorrelation best and default, respectively), the difference is even more clear.

However, I was wondering why I could never actually outdo the original paper’s annualized return, and out of interest, decided to more heavily weigh the momentum ranking than the original paper eventually had it set at. The result is a bumpier equity curve, but one that has a higher annualized return than any of the others. It’s also something that I didn’t try in my walk-forward example (though interested parties can simply modify the original momentum vector to contain a 1.5 weight, for instance).

Here’s the table of statistics for the different permutations:

> statsTable

Annualized.Return Worst.Drawdown Annualized.Sharpe.Ratio..Rf.0.. ReturnDrawdownRatio

original 14.43802 13.15625 1.489724 1.097427

originalSWCbest 14.70544 13.15625 1.421045 1.117753

originalSWCdefault 14.68145 13.37059 1.457418 1.098041

SMDB 13.55656 12.33452 1.410072 1.099075

SMDD 13.18864 11.94587 1.409608 1.104033

w311 15.76213 13.85615 1.398503 1.137555

originalMaxDecor 11.89159 11.68549 1.434220 1.017637

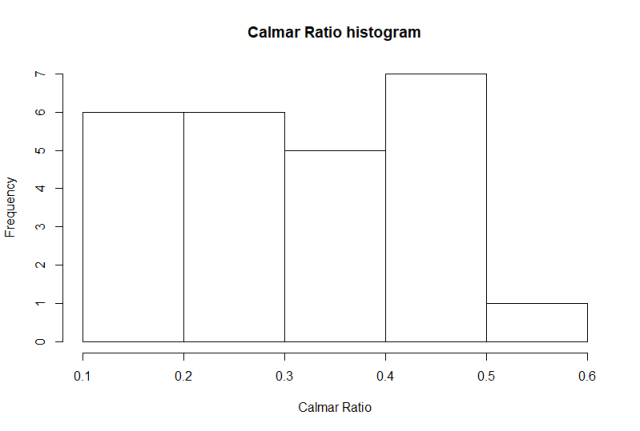

At the end of the day, all of the permutations exhibit solid results, and fall along different ends of the risk/return curve. The original settings exhibit the highest Sharpe Ratio (barely), but not the highest annualized return to max drawdown ratio (which surprisingly, belongs to the setting that overweights momentum).

To wrap this analysis up (since there are other strategies that I wish to replicate), here is the out-of-sample performance of these seven strategies (to Oct 30, 2014):

Maybe not the greatest thing in the world considering the S&P has made some spectacular returns in 2013, but nevertheless, the momentum variant strategies established new equity highs fairly recently, and look to be on their way up from their latest slight drawdown. Here are the statistics for 2013-2014:

statsTable <- data.frame(t(rbind(Return.annualized(tmp["2013::"])*100, maxDrawdown(tmp["2013::"])*100, SharpeRatio.annualized(tmp["2013::"]))))

statsTable$ReturnDrawdownRatio <- statsTable[,1]/statsTable[,2]

> statsTable

Annualized.Return Worst.Drawdown Annualized.Sharpe.Ratio..Rf.0.. ReturnDrawdownRatio

original 9.284678 8.259298 1.1966581 1.1241485

originalSWCbest 8.308246 9.657667 0.9627413 0.8602746

originalSWCdefault 8.916144 8.985685 1.0861781 0.9922609

SMDB 6.406438 9.657667 0.8366559 0.6633525

SMDD 5.641980 5.979313 0.7840507 0.9435833

w311 8.921268 9.025100 1.0142871 0.9884953

originalMaxDecor 2.888778 6.670709 0.4244202 0.4330542

So, the original parameters are working solidly, the stepwise correlation algorithm seems to be in a slight rut, and the variants without any emphasis on momentum simply aren’t that great (they were created purely as illustrative tools to begin with). Whether you prefer to run FAA with these securities, or with trading strategies of your own, my only caveat is that transaction costs haven’t been taken into consideration (from what I hear, interactive brokers charges you $1 per transaction, so it shouldn’t make a world of a difference), but beyond that, I believe these last four posts have shown that FAA is something that works. While it doesn’t always work perfectly (EG the S&P 500 had a very good 2013), the logic is sound, and the results are solid, even given some rather plain-vanilla type securities.

In any case, I think I’ll conclude with the fact that FAA works, and the stepwise correlation algorithm provides a viable alternative to computing your weights. I’ll update my IKTrading package with some formal documentation regarding this algorithm soon.

Thanks for reading.